The Hubble tension is the discrepancy between early-universe and late-universe measurements of the current rate of cosmic expansion. The tension was initially assumed to be systematic, with many thinking that errors in our measurements could be eliminated by better telescopes and better data, but following James Webb Space Telescope (JWST) results many believe that something more mysterious might be at play. Do we need new physics outside of cosmology’s standard model? In this piece, Marco Forgione explores recent attempts to resolve the tension and highlights the role of philosophy when science can’t make up its mind.

The best tool that scientists have for describing the history and structure of our universe is the Lambda Cold Dark Matter model (ΛCDM). Thanks to this model we can describe (among other things) the acceleration of the universe, its large-scale structures, its temporal evolution, and the many different forms of radiation we observe with our telescopes. However, the model is also characterized by six free parameters that need to be added “manually”, since they cannot be determined by theory alone. One such parameter is the expansion rate of the universe, known as the Hubble constant (H0), which is given by the ratio of the velocity of a cosmological object receding from us to its distance.

The parameter is not new in the literature: as early as 1929 Hubble gave a first H0 estimate of 500 (km/s)/Mpc from the relation between redshift and galaxy distance. However, it was soon found that such a value implied a universe that was only 2 billion years old, which was not old enough. With data from the Hale Telescope in the 1950s, Humason, Mayall, and Sandage calculated a value for the Hubble constant of approximately 180 (km/s)/Mpc, while a few years later, in 1958, Sandage and collaborators placed the value of H0 between 50 and 100 (km/s)/Mpc. The task was clear, but its execution was impeded by inadequate technology. Yet even with the development of better instruments, the discrepancy between measured values of H0 persisted, and in the 1970s and 1980s it became known as the 50-100 controversy (see: Tully 2024).
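The link between a Hubble constant of 500 (km/s)/Mpc and an age of roughly 2 billion years can be checked with a back-of-the-envelope calculation. The sketch below assumes that the "Hubble time" 1/H0 is an acceptable stand-in for the age of the universe, which ignores any change in the expansion rate over cosmic history and is therefore only a rough guide. The function name `hubble_time_gyr` is hypothetical and introduced here purely for illustration; the conversion constants are standard values.

```python
# Rough age estimate from the Hubble constant, assuming age ~ 1/H0.
# This ignores how the expansion rate changed over time, so it is only a guide.

KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion Julian years


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Return 1/H0 in billions of years for H0 given in (km/s)/Mpc."""
    if h0_km_s_mpc <= 0:
        raise ValueError("H0 must be positive")
    h0_per_second = h0_km_s_mpc / KM_PER_MPC  # H0 expressed in 1/s
    return 1.0 / h0_per_second / SECONDS_PER_GYR


if __name__ == "__main__":
    # Historical and modern values quoted in the article.
    for h0 in (500.0, 180.0, 72.0, 67.5):
        print(f"H0 = {h0:5.1f} (km/s)/Mpc -> 1/H0 is about {hubble_time_gyr(h0):.1f} Gyr")
```

Under this simplification, 500 (km/s)/Mpc gives just under 2 billion years, while values around 70 (km/s)/Mpc give roughly 14 billion years.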

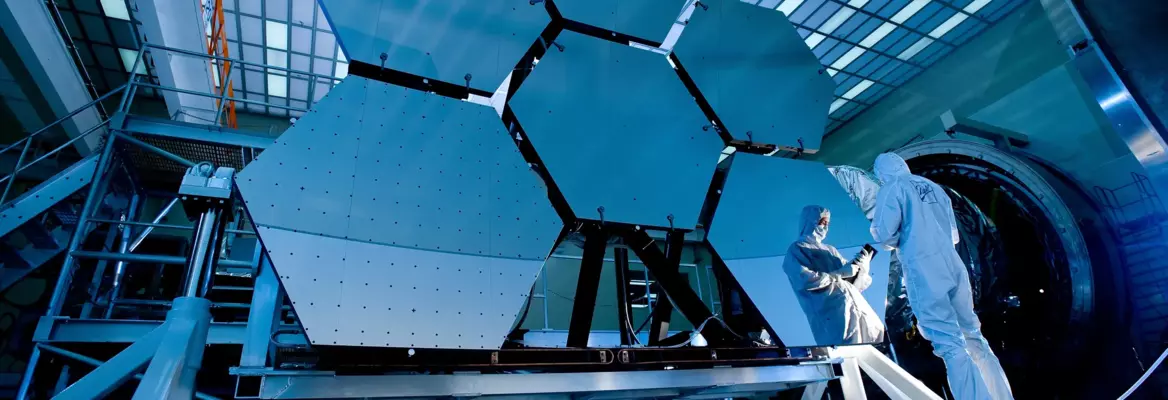

Then, in the 1990s, the launch of the Hubble Space Telescope (HST) made possible the HST Key Project, which used Cepheid stars to calibrate the distance to Type Ia supernovae in distant galaxies. The project delivered a value for the Hubble constant of 72±8 (km/s)/Mpc. It seemed that the H0 tension had been resolved.

___

Each rung of the distance-ladder method comes with systematic uncertainties

___

Then, with the Planck mission (2009-2013), observations of the Cosmic Microwave Background radiation (CMB) aligned well with the predictions of ΛCDM, but with a Hubble constant of 67.5±0.5 (km/s)/Mpc: a difference that, as distance-ladder measurements became more precise, grew to about 5σ.
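How a "sigma" figure of this kind is obtained can be sketched with a simple estimate, assuming the two measurements are independent and have Gaussian errors (real analyses are considerably more careful). Note that the Key Project's ±8 error bar is too wide to produce a 5σ gap on its own; a gap of that size requires a late-universe error bar of about ±1. The value 73 ± 1 used below is an illustrative assumption, not a number quoted in this article, and the function name `tension_sigma` is likewise hypothetical.

```python
import math


def tension_sigma(value_a: float, err_a: float, value_b: float, err_b: float) -> float:
    """Gap between two measurements in units of their combined 1-sigma error.

    Assumes independent Gaussian uncertainties, so the errors add in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


if __name__ == "__main__":
    planck = (67.5, 0.5)        # CMB value quoted in the article
    key_project = (72.0, 8.0)   # HST Key Project value quoted in the article
    illustrative = (73.0, 1.0)  # ASSUMED late-universe value, for illustration only

    print(f"Key Project vs Planck:  {tension_sigma(*key_project, *planck):.1f} sigma")
    print(f"Illustrative vs Planck: {tension_sigma(*illustrative, *planck):.1f} sigma")
```

The comparison shows why the tension sharpened over time: the central values barely moved, but the shrinking error bars turned a compatible pair of numbers into a significant disagreement.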

How is it possible that cosmologists and astrophysicists, measuring the same parameter, obtain significantly different results? Better analyses and measurements seem to confirm this tension, which is one of the reasons why philosophers have started looking into the matter from an epistemological perspective.

Figure 1. Plot showing previous attempts to measure the Hubble constant H0. (Source: Verde, 2019.)
