What separates science from pseudoscience? The distinction seems obvious, but attempts at a demarcation criterion - from Popper's 'falsifiability' to Langmuir's 'pathological science' - invariably fail, argues Michael D. Gordin.

A good place to start is a scholarly urban legend whose provenance is uncertain: “Nonsense is nonsense, but the history of nonsense is a very important science.” This statement is attributed to Saul Lieberman, a legendary Talmudic scholar, ostensibly when he was introducing the even more legendary Gershom Scholem. Maybe Lieberman said it; maybe he didn’t. Regardless, the content of the statement is far from being nonsense and opens up both a puzzle and a clue to its solution.

The puzzle is how we define what nonsense is in the first place. This is a central question of our moment, beset as we are with conspiracy theories and allegations of “fake news” and miracle cures. Indeed, it has been a central question ever since humanity began organizing its beliefs about knowledge: once you start that process, you need a way of dividing reliable claims from dubious ones. It’s not a solely academic problem. Everyone routinely sifts incoming information into (at least) two piles — we just don’t agree on how to do it. There are countless domains in which the puzzle is confronted.

Here, I will focus on determining what counts as a “pseudoscience.” Since being scientific is arguably the highest status our culture can assign to a knowledge claim, the contested boundary between things that we consider science and those other things that look like sciences but just don’t quite make it is especially fraught. The name for the puzzle in this context is the “demarcation problem,” a term coined by philosopher Karl Popper, and his proposed solution — the “falsifiability” demarcation criterion — remains the most famous.

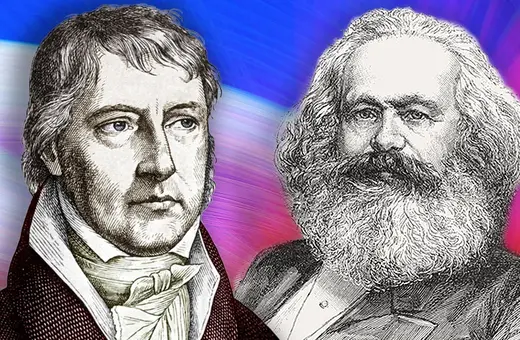

Here is how Popper formulated it in 1953, at its first public outing: “One can sum up all this by saying that the criterion of the scientific status of a theory is its falsifiability, or refutability, or testability.” That is, if you propose that a contention is scientific, but you are unable to formulate a test that it could fail (not that it does fail the test, for then it would be false, but that it is possible to fail it), then the claim merely seems to be scientific but isn’t; i.e., it is pseudoscientific. You have to risk failure in order to be scientific. This definition has made it into middle-school textbooks and is very widespread among those who have opinions about the subject. It is pretty good on some counts: Freudian psychoanalysis and Marxist “scientific socialism” don’t do very well in terms of falsifiability, as every imaginable data point can be assimilated by advocates into confirming the theory. Totalizing theories don’t risk anything.

Otherwise, though, Popper’s demarcation criterion doesn’t work especially well. Besides some technical epistemological problems, the biggest concern is whether it parses the sciences in the right way. Indeed, this is a test we want any conceivable demarcation criterion to pass. We want our criterion to recognize as scientific those theories which are very generally accepted as hallmarks of contemporary science, like quantum physics, natural selection, and plate tectonics. At the same time, we want our criterion to rule out doctrines like astrology and dowsing that are almost universally labeled pseudosciences.
