Drawing inspiration from the natural rhythms of the human brain, researchers are exploring how periods of "sleep" could enhance artificial intelligence learning capabilities and prevent the problem of catastrophic forgetting, writes Darcy Bounsall.

For around eight hours each night - roughly a third of our lives in total - we exist in a state of unconscious paralysis. Our heart rate and breathing slow, our body temperature falls, and we lie immobile and unresponsive. But as we slowly progress through the stages of sleep, something strange begins to happen: our brain activity shoots back up to levels similar to when we’re awake. Unbeknownst to us, as we drift off, our brains are spontaneously reactivating all that we’ve experienced in our waking states.

SUGGESTED READING

Why AI must learn to forget

By Ali Boyle

There is a vast interdisciplinary literature spanning both psychology and neuroscience that supports the vital role sleep plays in learning and memory. Now a recent study undertaken by Maxim Bazhenov and his colleagues at the University of California San Diego has shown that artificial neural networks also learn better when combined with periods of off-line reactivation that mimic biological sleep.

Artificial neural networks loosely model the neurons in a biological brain, and are currently one of the most successful machine-learning techniques for solving a variety of tasks, including language translation, image classification, and even controlling a nuclear fusion reaction.

One weakness of many of these AI models is that, unlike humans, they are often only able to learn one well-defined task extremely well. When trying to learn multiple different tasks, they have a tendency to abruptly overwrite previously learned information in a phenomenon known as catastrophic forgetting. In contrast, the brain is able to learn continuously and typically learns best when new training is interleaved with periods of sleep for memory consolidation.

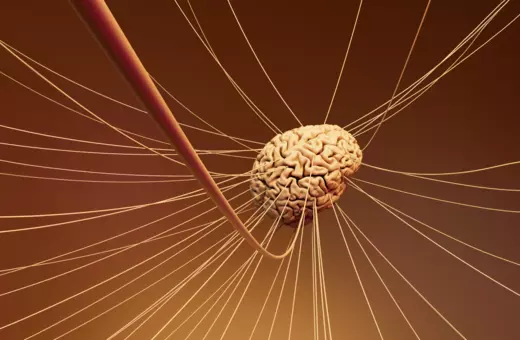

Memories are thought to be represented in the human brain by patterns of what is called synaptic weight, the strength or amplitude of a connection between two neurons. When we learn new information, neurons fire in a specific order, which can either strengthen or weaken the synapses between them. When we are asleep, the spiking patterns learned when we were awake are repeated spontaneously in a process called reactivation or replay. This typically happens in the hippocampus, and it’s been best studied with respect to 'place cells' - cells that are only active when the animal or person is in a particular location within an environment. When researchers carry on recording from these neurons after the animals go to sleep, they see the same patterns of activity replayed.

DeepMind explicitly cite biology as an inspiration for their own version of this process when it comes to their artificial neural networks. In their Deep Q-Networks paper, in which their system first learned to beat Atari games, they 'replayed' previous experiences in a shuffled order. The effect of this is to 'decorrelate' the inputs so that learning generalises more effectively. Normally, you experience events in a particular order - but that order is mostly just coincidence. By shuffling the experiences in this way during learning, you can get rid of these spurious correlations.
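To make the idea of shuffled replay concrete, here is a minimal Python sketch of a replay buffer. It is an illustration only, not DeepMind's implementation: the `ReplayBuffer` class, its `capacity` and `seed` parameters, and the made-up experience tuples are all assumptions introduced for this example.

```python
import random
from collections import deque


class ReplayBuffer:
    """Hypothetical fixed-size store of past experiences, sampled in random order."""

    def __init__(self, capacity, seed=None):
        # A bounded deque silently discards the oldest experience once full.
        self.memory = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, experience):
        self.memory.append(experience)

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks up the order in which
        # the experiences originally happened.
        return self.rng.sample(list(self.memory), batch_size)


buffer = ReplayBuffer(capacity=1000, seed=0)
for step in range(100):
    # Made-up experience tuple: (state, action, reward, next_state).
    buffer.add((step, step % 4, 1.0, step + 1))

batch = buffer.sample(8)
# The time steps in a batch are drawn from across the whole history
# rather than being eight consecutive moments.
print([experience[0] for experience in batch])
```

Because each training batch mixes moments from different points in the past, a network trained on such batches is less likely to latch onto the accident of which event happened to follow which.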
