Recently, the IAI released Bernardo Kastrup’s piece, ‘The Lunacy of “Machine Consciousness”’, in which he reflected on a disagreement with Susan Schneider at January’s IAI Live debate ‘Consciousness in the machine’. Here, Susan Schneider responds to Bernardo Kastrup’s critique of her position and argues for the ‘wait and see’ approach to machine consciousness.

The idea of conscious AI doesn’t strike me as conceptually or logically impossible; we can understand Asimov’s robot stories, for instance. I’ve discussed this matter in detail after mulling over various philosophical thought experiments. This doesn’t mean conscious machines will walk the Earth, or even that synthetic consciousness exists anywhere in the universe. For an idea can be logically consistent and conceptually coherent but still be technologically unfeasible.

Instead, I take a Wait and See Approach: we do not currently have sufficient information to determine whether AI consciousness will exist on Earth or elsewhere in the universe. Consider the following:

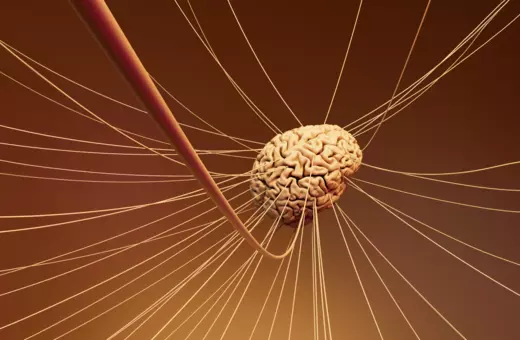

a) We do not understand the neural basis of consciousness in humans, nor do we have a clear, uncontroversial philosophical answer to the hard problem of consciousness: the problem of why all the information processing the brain engages in has a felt quality to it. This makes a theoretical, top-down approach to building machine consciousness difficult.

b) We don’t know if building conscious machines is even compatible with the laws of nature.

c) We don’t know if Big Tech will want to build conscious machines due to the ethical sensitivity of creating conscious systems.

d) We don’t know if building conscious AI would be technologically feasible — it might be ridiculously expensive.

In my interview, I stressed that if humans need a top-down theory of how to create conscious AI, we are in big trouble. For humans to deliberately create conscious AIs based on a theoretical understanding of consciousness itself, we would need to follow a recipe we haven’t yet discovered, with a list of ingredients that we may not even be able to grasp.

SUGGESTED READING

All-knowing machines are a fantasy

By Emily M. Bender

In a recent piece for the IAI, written after we took part in the debate ‘Consciousness in the machine’, Bernardo Kastrup accuses me of not merely claiming that conscious machines are logically or conceptually possible but of making the stronger claim that conscious machines are technologically feasible and compatible with the laws of nature. (See his rant about the “Flying Spaghetti Monster”.) But as you can see, I’m not saying this: I’m advocating the Wait and See Approach.

But why do I have a “wait and see approach” at all, rather than following Bernardo in rejecting the possibility of conscious AI altogether? I have a variety of reasons. First, since the jury is out on the above issues, the Wait and See Approach seems warranted. Second, I see several paths to the deliberate construction of conscious AI, assuming it is compatible with the laws of nature to do so. For example, here are two:

Consciousness Engineering

On this path, conscious AI is engineered by biological or artificial superintelligences that know the recipe. A superintelligence is an entity that surpasses human intelligence in every respect: scientific reasoning, moral reasoning, etc. Perhaps a (non-conscious) superintelligent AI will eventually be built on Earth, and it will want to build conscious AI.
