The troubling legacy of medical racism is still alive today. A recent study found that people of colour were 29% less likely to get regional anaesthesia for surgery compared to white patients. In the quest to harness Artificial Intelligence in healthcare, medical racism has crept into the algorithms that shape medical decisions. Arshin Adib-Moghaddam traces these deep-seated biases of healthcare systems all the way back to the Enlightenment era and race science, but offers hope and a blueprint for eliminating corrupt data moving forward.

Can we trust our hospitals and doctors? Is medicine a neutral science? These are some of the questions that need to be addressed before Artificial Intelligence (AI) is fully integrated into our health care sector. As I have argued in a new book, the AI algorithms governing our lives are prone to the mistakes of the past. Every single aspect of contemporary society, certainly in highly technologized settings such as health care, banking and education, is already affected and increasingly shaped by AI. Unfortunately, a painful history of discrimination and outright racism against minorities is part of that process, including in sciences considered to be “neutral” such as medicine.

___

We have to understand that in the birthplaces of western modernity, certainly also in the United States, medicine evolved in close conjunction with the “science” of racism.

___

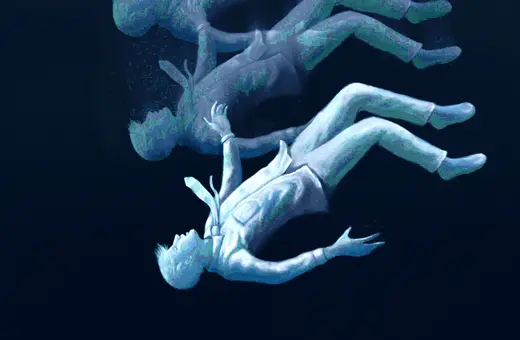

Racism as a “science” was a distinct invention of the European Enlightenment and western modernity more generally. In laboratories stacked with skulls of Homo sapiens, the idea was concocted that the (heterosexual) “White Man” was destined to save humanity from the barbarism of the inferior races. In short, medical racism framed and enabled Empire and colonialism. For instance, James Marion Sims, a 19th-century surgeon widely considered one of the founders of modern gynaecology, advanced a treatment for vesicovaginal fistulas, a condition that affects bladder control and fertility in women. In his experiments between 1845 and 1849, Sims carried out surgeries on a dozen enslaved women without using any anaesthetic. He shared the misconception, common at the time, that Black people could endure more pain than white people. This view is still present in the field of medicine and feeds into the data of AI algorithms. For example, recent research has shown that a prominent health care algorithm that determines which patients need more medical attention favoured white patients over Black patients whose condition was worse and who had more severe chronic health issues.

The idea that Black patients have a higher pain threshold, then, is rooted in the insidious “data” that we inherited from the European Enlightenment. Indeed, earlier in 2023, a British MP authored a Women and Equalities report which determined that racism is a major cause of massively higher maternity death rates for Black and disadvantaged women in the United Kingdom. Further research shows that white employees in the health care sector are less likely to believe reports of pain by Black patients, and therefore less likely to give them appropriate pain relief, than they are with white patients who have a comparable condition. Another study, by the Centers for Disease Control and Prevention in the United States, investigated the medical records of nearly 57,000 adults who had surgery between 2016 and 2021. It demonstrated that people of colour were 29% less likely to get regional anaesthesia in comparison to white patients.
